

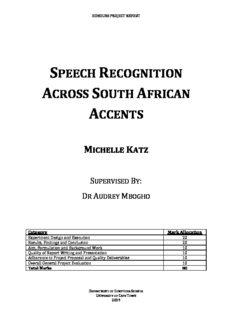

HONOURS PROJECT REPORT

SPEECH RECOGNITION ACROSS SOUTH AFRICAN ACCENTS

MICHELLE KATZ

SUPERVISED BY: DR AUDREY MBOGHO

| Category | Mark Allocation |
| --- | --- |
| Experiment Design and Execution | 20 |
| Results, Findings and Conclusion | 20 |
| Aim, Formulation and Background Work | 10 |
| Quality of Report Writing and Presentation | 10 |
| Adherence to Project Proposal and Quality Deliverables | 10 |
| Overall General Project Evaluation | 10 |
| Total Marks | 80 |

DEPARTMENT OF COMPUTER SCIENCE
UNIVERSITY OF CAPE TOWN
2009

Abstract

Speech recognition systems rarely perform well, as their recognition rates are easily influenced by the speaker and by the manner and environment in which words are spoken. For this reason, accents can decrease the ability of a speech recognition system to recognize words accurately. South Africa has 11 official languages, which suggests a real need to improve the accuracy of English recognizers when dealing with a variety of accents. In this paper two recognition models are created and compared in terms of recognition rates. The first model uses only English accent speakers to train the recognizer, whereas the second uses speakers with a variety of South African accents. The models were created using HTK, a speech toolkit created by Cambridge University, which uses Hidden Markov Models (HMMs) to model time series. Using HTK it was possible to create speech data for training the models and to perform acoustic analysis on the data. HMMs were then defined and trained. A dictionary and grammar were also created for use by the recognizer. When comparing these two models, it was found that the second, accented model performs slightly better than the English model. However, this difference was found by the Analysis of Variance (ANOVA) test to lack statistical significance. The recognition rates of this accented model were then compared to the results of individual models trained for each specific accent group.
The results of the individual models were slightly better than those of the global model. However, the difference was found to lack statistical significance. Further testing with larger amounts of data is recommended. The results of this report indicate a need to find other sources of inaccuracy which may be less costly to remove and may improve recognition rates more.

Keywords: Speech Recognition, Hidden Markov Models, HTK, Signal Processing, Computational Linguistics

Acknowledgments

I would like to thank my supervisor Dr Audrey Mbogho for her support, advice and motivation throughout this project. I would also like to thank Mr. Ben Murrell for his help in understanding Hidden Markov Models and for all his advice on the direction of the project. Thank you to Dr Juwa Nyirenda, whose explanation of the statistical process for analyzing my results was invaluable. I would like to express my appreciation for my two project partners, Neann Mathai and Heather Sobey, for their company, assistance and support. Lastly, I would like to thank the anonymous participants who gave their time and voices in creating the speech data used to train and test the recognizers.

TABLE OF CONTENTS

List of Illustrations
1 Introduction
2 Background
2.1 Speech Recognition
2.1.1 Basic Speech and Phonology
2.1.2 Methods
2.1.3 Accents and Dialects
2.1.4 Performance
2.1.5 Applications
2.1.6 Limitations and Challenges
2.2 Hidden Markov Models
2.3 HTK (HMM Toolkit)
2.4 South African Languages
2.4.1 Bantu Languages
2.4.2 Indo-European Languages
2.4.3 Language Usage
2.5 Past Studies in South African Speech
2.5.1 AST (African Speech Technology)
2.5.2 Meraka Institute
3 Experimental Design
3.1 Problem Statement
3.2 Dependent and Independent Variables
3.3 Data Collection
3.4 Models
3.5 Ethical and Legal Requirements
4 Implementation
4.1 Creation of Speech Files
4.2 Acoustic Analysis
4.3 HMM Definitions
4.4 HMM Training
4.5 Task Definition
4.6 Recognition Process
5 Testing and Results
5.1 Box Plots
5.2 Histograms
5.3 Normality Test
5.4 Analysis of Variance
5.5 Comparison of Global and Individual Model Results
5.6 Discussion of Results
6 Conclusion
6.1 Conclusions
6.2 Future Work
7 References
8 Appendix
8.1 Training Algorithms
8.1.1 Viterbi Algorithm
8.1.2 Baum-Welch Re-Estimation

LIST OF ILLUSTRATIONS

Figures

Figure 1: Speech Recognition Implementation Overview
Figure 2: The Signal and Associated Labels for the Word “bus”
Figure 3: HMM State Diagram
Figure 4: Hidden Markov Model Prototype for “bus”
Figure 5: Hidden Markov Model for “bus” After Re-Estimation
Figure 6: Dictionary Used by the Recognizer
Figure 7: Grammar Used by the Recognizer
Figure 8: Reference Master Label File
Figure 9: Master Macro File
Figure 10: Recorded Master Label File
Figure 11: Box Plot of Accuracy by Accent and Model
Figure 12: Histogram of English Model
Figure 13: Histogram of Accented Model
Figure 14: Normality Test – English Model
Figure 15: Normality Test – Accented Model
Figure 16: Histogram of Individual Accent Models
Figure 17: Normality Test – Individual Accent Models

Tables

Table 1: The 36 Words Collected
Table 2: Nested ANOVA - Accuracy versus Model, Accent and Speaker
Table 3: General Linear Model

1 INTRODUCTION

Speech recognition is a difficult problem, as accuracy can be affected by a number of different factors: for example, the tone, pitch and volume of a speaker’s voice, the age and gender of the speaker, the speed at which a word is spoken, background noise and the quality of the recording equipment. The problem becomes even greater for speaker-independent speech recognition, where the recognition system is required to work for speakers other than those used to train it. To complicate the problem further, the accent in which a word is spoken may also affect the system’s ability to recognize the word.

There are 11 official South African languages: English, Afrikaans, isiNdebele, isiXhosa, isiZulu, Sepedi, Sesotho, Setswana, siSwati, Tshivenda and Xitsonga.
Thus, there is high variability in the pronunciation of English words, especially among speakers for whom English is a second (or later) language. This suggests that any speech recognition software produced for South African users must be able to deal with a number of different South African accents. However, for South African languages there are acute deficiencies in our modeling abilities [9]. Ideally, software produced for South African users should cater for all 11 official languages and not just the pronunciation of English words by these speakers. However, for the purpose of this research only the pronunciation of English words will be considered.

A problem which occurs when training models for a number of different accent groups is that of finding the ideal number of training signals. In speech technology there is a tradeoff between effort and accuracy [6]. Accurate systems need large amounts of speech data, which require many person-hours to obtain. If too few speakers are used for training, the system may not be able to recognize words spoken by other speakers, i.e. the system would not be speaker independent. However, if there is too much variation in the speakers’ voices, it may be difficult for the speech models to converge.

Much research on speech recognition has been carried out in America, the UK and China. However, research on speech recognition in South Africa has mostly been limited to that of Stellenbosch University and the Meraka Institute. The availability of speech databases of South African voices is also limited. Despite this, there are many possibilities for speech recognition applications in South Africa as part of an Information and Communication Technology (ICT) for Development initiative. For example, speech technology can be used to create learning systems to improve literacy levels, to create voice-activated menus for mobile phones which can be used by the blind, or as an aid in filling out forms for home affairs.
However, all of these suggested applications require speech recognition systems with high accuracy levels. Thus far, speech recognition systems have not been able to reach high accuracy levels for independent speakers. It is therefore necessary to research ways of improving speech recognition accuracy. This report investigates the impact on the accuracy of a speech recognition system for speakers with Afrikaans, Black language, Cape Coloured and Indian accents when the recognizer has been trained with these accent groups.

In Section 2, the background of speech recognition and other relevant topics are discussed. Section 3 describes the design of the experiment conducted. The implementation is explained in Section 4 and the results of testing are analyzed in Section 5. Conclusions and implications of these results are discussed in Section 6, along with suggestions for future research.

2 BACKGROUND

2.1 SPEECH RECOGNITION

The main goal of speech recognition is to provide a means whereby people can speak to machines with the same ease with which they speak to each other [17]. It is necessary to distinguish between the concepts of speech recognition and voice recognition. Speech recognition can be seen as a conversion of speech signals into symbols representing words [17], [20]. Voice recognition, by contrast, identifies or recognizes an individual speaker [30]. This report looks into speech recognition and will not address voice recognition.

Recognition can be either of isolated words or of continuous speech [3], [25]. Past studies have focused mainly on continuous speech recognition [3], [20], [24], or on both continuous speech and isolated word recognition [1], [19]. Isolated words are significantly easier to recognize than continuous speech [1]. The speech signal is broken up into units of speech [20]. Choosing whole words as units of speech may be practical and could simplify the process [25].
However, particularly for continuous speech, recognition needs to be accurate at the phoneme level, especially in terms of the boundary times of vowel phonemes [33]. Phonemes have been adopted as the basic speech units for most speech research [20], [23], [24], [29], [33].

2.1.1 BASIC SPEECH AND PHONOLOGY [30]

Words can be distinguished from each other by the way in which they are pronounced. Changing a sound slightly can change the meaning of a word completely. This idea is known as contrast. For example, the consonants “k” and “g” distinguish between “cane” and “gain”. It is important to note that the distinction between these sounds is significant in English, but this is not necessarily true for other languages. When learning English, a speaker will need to learn to differentiate between these sounds. This can cause confusion for second or third language speakers. Sounds used contrastively are called phonemes (the Greek word “phone” means “sound”).

The sounds of phonemes can be produced by using different parts of the vocal tract. For example, using the lips, teeth, tongue (in terms of the tip, blade, body or root), nasal cavity, etc. affects the sound produced. Different languages may rely more heavily on a particular part of the vocal tract than other languages do. For example, Afrikaans is more of a guttural language (using the pharynx wall or the larynx) than English. This may affect how an Afrikaans speaker pronounces English words.

Variant pronunciations of contrastively used sounds are also possible. Variant pronunciations are called allophones of a phoneme. The differences between allophones can be very slight and difficult to recognize when looking only at a speech signal. It is due to allophones that the sounds in the words “pan”, “tan” and “can” differ from those in “span”, “Stan” and “scan”. The sounds “p”, “t” and “k” are called plosives and can be accompanied by a puff of air, or aspiration, as in “pan”, “tan” and “can”.
However, the aspiration is not present when the plosive follows “s”, as in “span”, “Stan” and “scan”.

Another phenomenon which the above example highlights is that of coarticulation. The articulation of one sound may have an uncontrollable effect on the articulation of neighbouring sounds. Once again, this is very difficult to pick up from a speech signal, either manually or by a speech recognizer.

Stress refers to the force with which syllables are pronounced. There can be patterns of stressed and unstressed syllables. This is known as rhythm. Intonation is closely linked to these two concepts and refers to the change in pitch of the voice throughout an utterance. Stress, rhythm and intonation together are called prosody. The usage of stress, rhythm and intonation varies from one language to the next. Some languages use different tunes to differentiate between individual words; these are called tone languages. This is not the case for English; however, isiZulu and isiXhosa are tonal languages [9].

2.1.2 METHODS

Useful methods in speech recognition include Hidden Markov Models (HMMs) and neural networks [26]. These methods can be improved on by integrating them with maximum likelihood [3], sparse coding [29] and common sense [18] methods.

HIDDEN MARKOV MODELS

HMMs are a highly popular method in speech recognition [25]. The HMM methodology casts the speech recognition problem as a search for the best path through a weighted, directed graph [22]. However, HMMs need a large number of states in order to achieve accuracy, which leads to a high computation cost [33]. Hidden Markov Models are explained in further detail in Section 2.2.

NEURAL NETWORKS

An artificial intelligence approach to speech recognition is possible by studying neural networks [26]. Neural networks are a popular method in speech recognition, second to HMMs [24].
It is believed that neural networks may have the ability to optimally adjust the parameters from the training data and can be employed to minimize speaker variation effects for speaker-independent speech recognition [10]. However, neural networks have not shown significant improvement over HMM algorithms [1].

MAXIMUM LIKELIHOOD

Maximum likelihood is a statistical method and the standard criterion for estimation of HMM parameters [3], [10], [12], [23], [28], [31]. A linguistic decoder is used to compute the likelihood of each sentence in a language and then to find the most likely one [3]. The likelihood is the probability of that path being chosen [3]. The maximum likelihood method in continuous speech recognition is covered in detail in [3].

SPARSE CODING [29]

Sparse coding is an efficient way of coding information, as very few elements are active. The idea comes from looking at how the brain functions. Neurons can be seen as binary elements, either silent or active. Several neurons can form an activity pattern over time. When many neurons are active, it is known as dense coding. In contrast, local coding has very few active neurons. The brain compromises between dense and local coding, and this is called sparse coding. In [29] this approach is used to show how continuous speech recognition can be performed using sparse coding. After comparing the performance of a sparse coding system with other systems, including HMM-based speech recognition, [29] found that the 15% word error rate for HMM was only slightly better than the 19% word error rate achieved using sparse coding. However, there is a trade-off, as training using sparse coding is time consuming.

“CALM INCENSE” – COMMON SENSE

Although many words are phonetically similar, contextually they are very different. A ConceptNet can be used to add everyday knowledge to the words [18]. It is then possible to disambiguate between phonetically similar words by choosing the word that makes sense in context [18].
It was found that by looking at a word’s contextual meaning and using semantics and common sense, errors in recognition can be greatly reduced [18].

2.1.3 ACCENTS AND DIALECTS

There is high variability in speech due to dialects, pronunciation and speaking style [1], [33]. Different pronunciations will have different phonetic spellings, and therefore speakers with different dialects are likely to find systems unusable due to high error rates [20]. Pronunciation was an important issue in [33], as the system was being used to teach English as a second language. In such situations users are likely to mispronounce words, so the system must include alternate, acceptable pronunciations [33]. There has been much research on Chinese dialects and on tones in Asian languages [5], [16]. Efficient speech recognition must be able to cope with different accents and speaking styles [24]. Some toolkits, such as HTK, use pronunciation dictionaries which hold more than one pronunciation for each word [10].

IDEA (International Dialects of English Archive) was created in 1997 and holds an archive of downloadable recordings of many dialects of English, including South African English. These recordings were found to be inappropriate for the research covered in this paper, as the speech data was limited and consisted of continuous speech. AST (African Speech Technology) has also created a speech database of South African accents and is discussed in Section 2.5.1 below. A study of accents and pronunciations in South African Bantu languages was completed in [35]. Although there have been many similar studies of South African languages in speech recognition, there is limited research on the effects of accents on speech recognition accuracy.

2.1.4 PERFORMANCE

Performance in speech recognition is measured in terms of accuracy and speed.
Speech recognition performance has not yet reached the level of human performance, as machine error rates are more than an order of magnitude greater than human error rates [17], [19]. Due to the low performance of automatic speech recognition systems, it is difficult to extend their application to new fields [13]. Speaker variation is one of the major sources of error and can cause computational complexity to increase substantially [10]. Some believe that most improvements in speech recognition performance are due to advances in microelectronics and are independent of work in speech technology [17]. However, although error rates are considered too high for use in many applications, development of speech recognition systems is still an ongoing process [10]. It is possible for parameters to be “pruned” in the decoding stage in order to decrease computation time with moderate degradation of the word error rate [10]. In order to improve performance in speech recognition, a paradigm shift may be necessary from signal transcription to message comprehension, where the system recognizes not only words but also their meaning in context [17].

Performance for isolated words and command recognition is significantly better than that of continuous speech recognition [1]. This is due to a number of factors. For example, there is no need to worry about word boundary locations in isolated word recognition [34]. For continuous speech, word boundaries are a problem, as these boundaries may be blurred [1]. Word recognition with a limited vocabulary has simpler grammars and requires less speech data. In addition, the words have equal probabilities of being spoken [29]. This paper looks specifically at isolated words, as recognition rates are higher than those of continuous speech and yet there are many applications which can be built around isolated word recognition.
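
The accuracy measures mentioned in this section can be illustrated with a short sketch. The code below is not taken from this report or from HTK; it assumes the common definition of word error rate as the word-level edit distance (substitutions, deletions and insertions) divided by the number of reference words, and a simple fraction-correct measure for isolated words. The function names and the example words are hypothetical.

```python
def word_error_rate(reference, hypothesis):
    """Word error rate: word-level edit distance / number of reference words.

    Assumed definition (not from the report): substitutions, deletions and
    insertions each cost 1.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


def isolated_word_accuracy(expected, recognized):
    """Fraction of isolated-word utterances that were recognized correctly."""
    if len(expected) != len(recognized) or not expected:
        raise ValueError("need two non-empty lists of equal length")
    correct = sum(1 for e, r in zip(expected, recognized) if e == r)
    return correct / len(expected)


# Hypothetical example data, for illustration only.
wer = word_error_rate("the bus is late", "the bus was late")
acc = isolated_word_accuracy(["bus", "taxi", "train", "car"],
                             ["bus", "taxi", "plane", "car"])
```

For isolated words each utterance is a single word, so no alignment is needed and the fraction-correct measure is sufficient; the edit-distance form matters only for continuous speech, where words can be inserted or dropped.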

2.1.5 APPLICATIONS

For applications based on speech recognition to be viable, the recognizer must provide a measurable benefit to users, be user-friendly and accurate, and respond in real time [26]. Speech recognition can have applications in office and business systems, manufacturing, telecommunications and healthcare [26]. The use of speech recognition technology in healthcare was studied in [7]. It was found that speech applications were used for dictation, speech-based interactive systems, speech-controlled equipment and language interpretation systems [7]. A speech recognizer was used for computer-aided instruction in order to teach English as a second language [33]. The system helped students to practice and improve their spoken English [33]. Speech recognition has been used to convert telephone speech to text [10], [31]. However, transcription of conversational telephone speech is a highly challenging task in speech recognition, and state-of-the-art systems achieve word error rates of 30% to 40% [10]. It is possible to use speech recognition to summarize voice mail messages as text [15]. In this case the system is also required to identify the important information in a message [15].

2.1.6 LIMITATIONS AND CHALLENGES

Many challenges were identified in the literature. Speech recognition systems will need to be improved in terms of ease of use [1]. Speech recognition systems, for example dictation programs, are often frustrating and unintuitive to use. For this reason there is a need to improve speech recognition in terms of human-computer interaction. As there are so many limitations on the accuracy of speech systems due to noise, speaker and recording environment, there is also a need to create robust systems which can achieve high performance even with these variations [1], [20], [22].
Systems will also need to separate foreground and background speech effectively [31], as background noise can easily compromise the quality of speech signals. An optimal compromise should be found among memory size, memory bandwidth and computation power [11]. Systems should be able to deal with distant speakers and overlapping speech [31]. A need for a breathing model was also identified [11]. A challenge identified by [6] is that of finding a compromise between recognition accuracy and the amount of speech data required.
