One-Way Independent ANOVA PDF


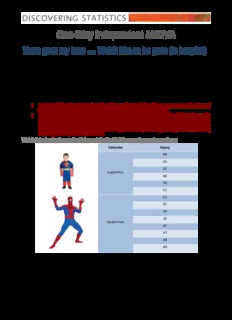

One-Way Independent ANOVA

There goes my hero …. Watch him as he goes (to hospital)

Children wearing superhero costumes are more likely to harm themselves because of the unrealistic impression of invincibility that these costumes could create. For example, children have reported to hospital with severe injuries because of trying ‘to initiate flight without having planned for landing strategies’ (Davies, Surridge, Hole, & Munro-Davies, 2007). I can relate to the imagined power that a costume bestows upon you; even now, I have been known to dress up as Fisher by donning a beard and glasses and trailing a goat around on a lead in the hope that it might make me more knowledgeable about statistics.

Imagine we had data about the severity of injury (on a scale from 0, no injury, to 100, death) for children reporting to the emergency centre at hospitals and information on which superhero costume they were wearing (hero): Spiderman, Superman, the Hulk or a Teenage Mutant Ninja Turtle. The data are in Table 1 and there are descriptive statistics in Output 1. The researcher hypothesized:

• Costumes of ‘flying’ superheroes (that is, the ones that travel through the air: Superman and Spiderman) will lead to more severe injuries than non-flying ones (the Hulk and Ninja Turtles).
• There will be a diminishing trend in injuries depending on the costume: Superman (most injuries because he flies), Spiderman (next highest injuries because although technically he doesn’t fly, he does climb buildings and throws himself about high up in the air), Hulk (doesn’t tend to fly about in the air much but does smash buildings and punch hard objects that would damage a child if they hit them)¹, and Ninja Turtles (let’s face it, they engage in fairly twee Ninja routines).
Table 1: Data showing the severity of injury sustained by 30 children wearing superhero costumes

| Costume | Injury |
|---|---|
| Superman | 69, 32, 85, 66, 58, 52 |
| Spiderman | 51, 31, 58, 20, 47, 37, 49, 40 |
| Hulk | 26, 43, 10, 45, 30, 35, 53, 41 |
| Ninja Turtle | 18, 18, 30, 30, 30, 41, 18, 25 |

¹ Some of you might take issue with this because you probably think of the Hulk as a fancy bit of CGI that leaps skyscrapers. However, the ‘proper’ Hulk, that is, the one that was on TV during my childhood in the late 1970s (see YouTube), was in fact a real man with big muscles painted green. Make no mistake, he was way scarier than any CGI, but he did not jump over skyscrapers.

© Prof. Andy Field, 2016 www.discoveringstatistics.com

Generating Contrasts

Based on what you learnt in the lecture, remember that we need to follow some rules to generate appropriate contrasts:

• Rule 1: Choose sensible comparisons. Remember that you want to compare only two chunks of variation and that if a group is singled out in one comparison, that group should be excluded from any subsequent contrasts.
• Rule 2: Groups coded with positive weights will be compared against groups coded with negative weights. So, assign one chunk of variation positive weights and the opposite chunk negative weights.
• Rule 3: The sum of weights for a comparison should be zero. If you add up the weights for a given contrast the result should be zero.
• Rule 4: If a group is not involved in a comparison, automatically assign it a weight of 0. If we give a group a weight of 0 then this eliminates that group from all calculations.
• Rule 5: For a given contrast, the weights assigned to the group(s) in one chunk of variation should be equal to the number of groups in the opposite chunk of variation.

Figure 1 shows how we would apply Rule 1 to the Superhero example. We’re told that we want to compare flying superheroes (i.e. Superman and Spiderman) against non-flying ones (the Hulk and Ninja Turtles) in the first instance. That will be contrast 1.
However, because each of these chunks is made up of two groups (e.g., the flying superheroes chunk comprises both children wearing Spiderman and those wearing Superman costumes), we need a second and third contrast that break each of these chunks down into their constituent parts.

To get the weights (Table 2), we apply Rules 2 to 5. Contrast 1 compares flying (Superman, Spiderman) to non-flying (Hulk, Turtle) superheroes. Each chunk contains two groups, so the weights for the opposite chunks are both 2. We assign one chunk positive weights and the other negative weights (in Table 2 I’ve chosen the flying superheroes to have positive weights, but you could do it the other way around).

Contrast 2 then compares the two flying superheroes to each other. First we assign both non-flying superheroes a 0 weight to remove them from the contrast. We’re left with two chunks: one containing the Superman group and the other containing the Spiderman group. Each chunk contains one group, so the weights for the opposite chunks are both 1. We assign one chunk positive weights and the other negative weights (in Table 2 I’ve chosen to give Superman the positive weight, but you could do it the other way around).

[Figure 1: Contrasts for the Superman data. SSM (variance in injury severity explained by different costumes) is split by Contrast 1 into ‘flying’ superheroes (Superman and Spiderman) and non-‘flying’ superheroes (Hulk and Turtle); Contrast 2 splits the former into Superman and Spiderman; Contrast 3 splits the latter into Hulk and Ninja Turtle.]

Table 2: Weights for the contrasts in Figure 1

| Contrast | Superman | Spiderman | Hulk | Ninja Turtle |
|---|---|---|---|---|
| Contrast 1 | 2 | 2 | -2 | -2 |
| Contrast 2 | 1 | -1 | 0 | 0 |
| Contrast 3 | 0 | 0 | 1 | -1 |

Finally, Contrast 3 compares the two non-flying superheroes to each other. First we assign both flying superheroes a 0 weight to remove them from the contrast. We’re left with two chunks: one containing the Hulk group and the other containing the Turtle group.
Each chunk contains one group, so the weights for the opposite chunks are both 1. We assign one chunk positive weights and the other negative weights (in Table 2 I’ve chosen to give the Hulk the positive weight, but you could do it the other way around).

Note that if we add the weights we get 0 in each case (Rule 3): 2 + 2 + (-2) + (-2) = 0 (Contrast 1); 1 + (-1) + 0 + 0 = 0 (Contrast 2); and 0 + 0 + 1 + (-1) = 0 (Contrast 3).

Effect Sizes: Cohen’s d

We discussed earlier in the module that it can be useful not just to rely on significance testing but also to quantify the effects in which we’re interested. When looking at differences between means, a useful measure of effect size is Cohen’s d. This statistic is very easy to understand because it is the difference between two means divided by some estimate of the standard deviation of those means:

$$\hat{d} = \frac{\bar{X}_1 - \bar{X}_2}{s}$$

I have put a hat on the d to remind us that we’re really interested in the effect size in the population, but because we can’t measure that directly, we estimate it from the samples (the hat means ‘estimate of’). By dividing by the standard deviation we are expressing the difference in means in standard deviation units (a bit like a z-score). The standard deviation is a measure of ‘error’ or ‘noise’ in the data, so d is effectively a signal-to-noise ratio. However, if we’re using two means, then there will be a standard deviation associated with each of them, so which one should we use? There are three choices:

1. If one of the groups is a control group it makes sense to use that group’s standard deviation to compute d (the argument being that the experimental manipulation might affect the standard deviation of the experimental group, so the control group SD is a ‘purer’ measure of natural variation in scores).
2. Sometimes we assume that group variances (and therefore standard deviations) are equal (homogeneity of variance) and if they are we can pick a standard deviation from either of the groups because it won’t matter.
3. We use what’s known as a ‘pooled estimate’, which is the weighted average of the two group variances. This is given by the following equation:

$$s_p = \sqrt{\frac{(N_1 - 1)s_1^2 + (N_2 - 1)s_2^2}{N_1 + N_2 - 2}}$$

Let’s look at an example. Say we wanted to estimate d for the effect of Superman costumes compared to Ninja Turtle costumes. Output 1 shows us the means, sample size and standard deviation for these two groups:

• Superman: M = 60.33, N = 6, s = 17.85, s² = 318.62
• Ninja Turtle: M = 26.25, N = 8, s = 8.16, s² = 66.50

Neither group is a natural control (you would need a ‘no costume’ condition really), but if we decided that Ninja Turtle (for some reason) was a control (perhaps because Turtles don’t fly but Supermen do) then d is simply:

$$\hat{d} = \frac{\bar{X}_{\text{Experimental}} - \bar{X}_{\text{Control}}}{s_{\text{Control}}} = \frac{60.33 - 26.25}{8.16} = 4.18$$

In other words, the mean injury severity for people wearing Superman costumes is about 4 standard deviations greater than for those wearing Ninja Turtle costumes. This is a pretty huge effect.

Cohen (1988, 1992) has made some widely used suggestions about what constitutes a large or small effect: d = 0.2 (small), 0.5 (medium) and 0.8 (large). Be careful with these benchmarks because they encourage the kind of lazy thinking that we were trying to avoid and ignore the context of the effect, such as the measurement instruments and general norms in a particular research area.

Let’s have a look at using the pooled estimate.
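For readers who want to check the arithmetic, here is a minimal Python sketch that computes d for Superman versus Ninja Turtle costumes with both standardizers discussed here (the control group's standard deviation and the pooled estimate). It uses only the rounded summary statistics quoted from Output 1; the function and variable names are illustrative choices, not part of the handout or of SPSS.

```python
import math

# Rounded summary statistics quoted in the text (Output 1).
mean_superman, n_superman, sd_superman = 60.33, 6, 17.85
mean_turtle, n_turtle, sd_turtle = 26.25, 8, 8.16


def pooled_sd(n1, s1, n2, s2):
    """Square root of the weighted average of two group variances."""
    return math.sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))


def cohens_d(mean1, mean2, sd):
    """Difference between two means in units of the chosen standard deviation."""
    return (mean1 - mean2) / sd


# Choice 1: treat Ninja Turtle as the 'control' and use its standard deviation.
d_control = cohens_d(mean_superman, mean_turtle, sd_turtle)

# Choice 3: use the pooled estimate of the standard deviation.
s_p = pooled_sd(n_superman, sd_superman, n_turtle, sd_turtle)
d_pooled = cohens_d(mean_superman, mean_turtle, s_p)

print(f"d using control SD: {d_control:.2f}")
print(f"pooled SD:          {s_p:.2f}")
print(f"d using pooled SD:  {d_pooled:.2f}")
```

Because the inputs are rounded to two decimal places, results from the raw scores may differ slightly in the second decimal place.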
$$s_p = \sqrt{\frac{(6 - 1)17.85^2 + (8 - 1)8.16^2}{6 + 8 - 2}} = \sqrt{\frac{1593.11 + 466.10}{12}} = \sqrt{171.60} = 13.10$$

When the group standard deviations are different, this pooled estimate can be useful; however, it changes the meaning of d because we’re now comparing the difference between means against all of the background ‘noise’ in the measure, not just the noise that you would expect to find in normal circumstances. Using this estimate of the standard deviation we get:

$$\hat{d} = \frac{\bar{X}_{\text{Experimental}} - \bar{X}_{\text{Control}}}{s_p} = \frac{60.33 - 26.25}{13.10} = 2.60$$

Notice that d is smaller now; the injury severity for Superman costumes is about 2.6 standard deviations greater than for Ninja Turtle costumes (which is still pretty big).

SELF-TEST: Compute Cohen’s d for the effect of Superman costumes on injury severity compared to Hulk and Spiderman costumes. Try using both the standard deviation of the control (the non-Superman costume) and also the pooled estimate. (Answers at the end of the handout)

Running One-Way Independent ANOVA on SPSS

Let’s conduct an ANOVA on the injury data. We need to enter the data into the data editor using a coding variable specifying to which of the four groups each score belongs. We need to do this because we have used a between-group design (i.e. different people in each costume). So, the data must be entered in two columns (one called hero which specifies the costume worn and one called injury which indicates the severity of injury each child sustained). You can code the variable hero any way you wish but I recommend something simple such as 1 = Superman, 2 = Spiderman, 3 = The Hulk, and 4 = Ninja Turtle.

→ Save these data in a file called Superhero.sav.
→ Independent Variables are sometimes referred to as Factors.

To conduct one-way ANOVA we have to first access the main dialogue box by selecting Analyze > Compare Means > One-Way ANOVA… (Figure 2). This dialogue box has a space where you can list one or more dependent variables and a second space to specify a grouping variable, or factor.
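The two-column layout described above (one row per child, with a numeric code for the costume) can be sketched outside SPSS as follows. This is only an illustration: the scores are as read from Table 1, the codes follow the recommended 1 to 4 scheme, and writing the rows as CSV text is an assumption made for the example (the handout itself saves the data as Superhero.sav).

```python
import csv
import io

# Coding scheme recommended in the text for the grouping variable `hero`.
HERO_CODES = {1: "Superman", 2: "Spiderman", 3: "The Hulk", 4: "Ninja Turtle"}

# Injury scores as read from Table 1, keyed by the numeric costume code.
INJURY_BY_HERO = {
    1: [69, 32, 85, 66, 58, 52],
    2: [51, 31, 58, 20, 47, 37, 49, 40],
    3: [26, 43, 10, 45, 30, 35, 53, 41],
    4: [18, 18, 30, 30, 30, 41, 18, 25],
}

# One row per child: (hero code, injury score). This is the 'long' format
# needed for a between-group design.
rows = [(code, score) for code, scores in INJURY_BY_HERO.items() for score in scores]

# Write the rows as CSV text with the two column names used in the handout.
buffer = io.StringIO()
writer = csv.writer(buffer)
writer.writerow(["hero", "injury"])
writer.writerows(rows)
csv_text = buffer.getvalue()

print(f"{len(rows)} rows")
for code, label in HERO_CODES.items():
    print(code, label, len(INJURY_BY_HERO[code]), "children")
```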
Factor is another term for independent variable. For the injury data we need to select only injury from the variable list and transfer it to the box labelled Dependent List by clicking on the arrow button (or dragging it there). Then select the grouping variable hero and transfer it to the box labelled Factor by clicking on the arrow button (or dragging it). If you click on Contrasts you access the dialog box that allows you to conduct planned comparisons, and by clicking on Post Hoc you access the post hoc tests dialog box. These two options will be explained during the next practical class.

Figure 2: Main dialogue box for one-way ANOVA

Planned Comparisons Using SPSS

Click on Contrasts to access the dialogue box in Figure 3, which has two sections. The first section is for specifying trend analyses. If you want to test for trends in the data then tick the box labelled Polynomial and select the degree of polynomial you would like. The Superhero data has four groups and so the highest degree of trend there can be is a cubic trend (see Field, 2013, Chapter 11). We predicted that the injuries will decrease in this order: Superman > Spiderman > Hulk > Ninja Turtle. This could be a linear trend, or possibly quadratic (a curved descending trend) but not cubic (because we’re not predicting that injuries go down and then up).

It is important from the point of view of trend analysis that we have coded the grouping variable in a meaningful order. To detect a meaningful trend, we need to have coded the groups in the order in which we expect the mean injuries to descend; that is, Superman, Spiderman, Hulk, Ninja Turtle. We have done this by coding the Superman group with the lowest value (1), Spiderman with the next largest value (2), the Hulk with the next largest value (3), and the Ninja Turtle group with the largest coding value (4). If we coded the groups differently, this would influence both whether a trend is detected and, if by chance a trend is detected, whether it is meaningful.
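To illustrate why the coding order matters, the sketch below applies the standard orthogonal polynomial weights for four equally spaced levels (linear: -3, -1, 1, 3; quadratic: 1, -1, -1, 1) to the group means computed from Table 1. It is a simplified illustration of the idea, not a reproduction of what SPSS does: it works on unweighted means and ignores the unequal group sizes, and the shuffled ordering is a hypothetical example.

```python
# Injury scores as read from Table 1, in the coded order 1..4.
SCORES = {
    "Superman": [69, 32, 85, 66, 58, 52],
    "Spiderman": [51, 31, 58, 20, 47, 37, 49, 40],
    "Hulk": [26, 43, 10, 45, 30, 35, 53, 41],
    "Ninja Turtle": [18, 18, 30, 30, 30, 41, 18, 25],
}

# Standard orthogonal polynomial weights for four equally spaced levels.
LINEAR = [-3, -1, 1, 3]
QUADRATIC = [1, -1, -1, 1]


def contrast_value(weights, group_means):
    """Weighted sum of group means; far from zero suggests the pattern is present."""
    return sum(w * m for w, m in zip(weights, group_means))


means = [sum(v) / len(v) for v in SCORES.values()]

# Groups in the predicted descending order (Superman ... Ninja Turtle).
linear = contrast_value(LINEAR, means)
quadratic = contrast_value(QUADRATIC, means)

# Hypothetical alternative coding: Spiderman, Ninja Turtle, Superman, Hulk.
shuffled_means = [means[1], means[3], means[0], means[2]]
linear_shuffled = contrast_value(LINEAR, shuffled_means)

print(f"linear (predicted order): {linear:.2f}")
print(f"quadratic (predicted order): {quadratic:.2f}")
print(f"linear (shuffled order): {linear_shuffled:.2f}")
```

With the groups in the predicted order the linear contrast is strongly negative (means fall steadily across codes 1 to 4); with the shuffled coding the same weights give a much smaller value, so a genuine descending pattern could be missed.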
For the superhero data we predict at most a quadratic trend (see above), so tick the Polynomial option, then open the Degree drop-down list and select Quadratic (the drop-down list should now say Quadratic); see Figure 3. If a quadratic trend is selected SPSS will test for both linear and quadratic trends.

To conduct planned comparisons, the first step is to decide which comparisons you want to do and then what weights must be assigned to each group for each of the contrasts (see Field, 2013, Chapter 11). We saw earlier in this handout what sensible contrasts would be, and what weights to give them (see Figure 1 and Table 2). To enter the weights in Table 2 we use the lower part of the dialogue box in Figure 3.

Figure 3: Dialog box for conducting planned comparisons

Entering Contrast 1

We will specify contrast 1 first. It is important to make sure that you enter the correct weighting for each group, so you should remember that the first weight that you enter should be the weight for the first group (that is, the group coded with the lowest value in the data editor). For the superhero data, the group coded with the lowest value was the Superman group (which had a code of 1) and so we should enter the weighting for this group first. Click in the box labelled Coefficients with the mouse and then type ‘2’ in this box and click on Add. Next, we input the weight for the second group, which was the Spiderman group (because this group was coded in the data editor with the second lowest value). Click in the box labelled Coefficients with the mouse and then type ‘2’ in this box and click on Add. Next, we input the weight for the Hulk group (because it had the next largest code in the data editor), so click in the box labelled Coefficients with the mouse and type ‘-2’ and click on Add.
Finally, we input the weight for the last group (the one with the largest code in the data editor), which was the Ninja Turtle group: click in the box labelled Coefficients with the mouse and type ‘-2’ and click on Add. The box should now look like Figure 4 (left).

Figure 4: Contrasts dialog box completed for the three contrasts of the Superhero data

Once you have input the weightings you can change or remove any one of them by using the mouse to select the weight that you want to change. The weight will then appear in the box labelled Coefficients where you can type in a new weight and then click on Change. Alternatively, you can click on any of the weights and remove it completely by clicking Remove. Underneath the weights SPSS calculates the coefficient total, which should equal zero (if you’ve used the correct weights). If the coefficient total is anything other than zero you should go back and check that the contrasts you have planned make sense and that you have followed the appropriate rules for assigning weights.

Entering Contrast 2

Once you have specified the first contrast, click on Next. The weightings that you have just entered will disappear and the dialogue box will now read contrast 2 of 2. The weights for contrast 2 should be: 1 (Superman group), -1 (Spiderman group), 0 (Hulk group) and 0 (Ninja Turtle group). We can specify this contrast as before. Remembering that the first weight we enter will be for the Superman group, we must enter the value 1 as the first weight. Click in the box labelled Coefficients with the mouse and then type ‘1’ and click on Add. Next, we need to input the weight for the Spiderman group by clicking in the box labelled Coefficients and then typing ‘-1’ and clicking on Add. Then the Hulk group: click in the box labelled Coefficients, type ‘0’ and click on Add.
Finally, we need to input the weight for the Ninja Turtle group by clicking in the box labelled Coefficients and then typing ‘0’ and clicking on Add (see Figure 4, middle).

Entering Contrast 3

Click on Next, and you can enter the weights for the final contrast. The dialogue box will now read contrast 3 of 3. The weights for contrast 3 should be: 0 (Superman group), 0 (Spiderman group), 1 (Hulk group) and -1 (Ninja Turtle group). We can specify this contrast as before. Remembering that the first weight we enter will be for the Superman group, we must enter the value 0 as the first weight. Click in the box labelled Coefficients, type ‘0’ and click on Add. Next, we input the weight for the Spiderman group by clicking in the box labelled Coefficients and then typing ‘0’ and clicking on Add. Then the Hulk group: click in the box labelled Coefficients, type ‘1’ and click on Add. Finally, we input the weight for the Ninja Turtle group by clicking in the box labelled Coefficients, typing ‘-1’ and clicking on Add (see Figure 4, right). When all of the planned contrasts have been specified click on Continue to return to the main dialogue box.

Post Hoc Tests in SPSS

Normally if we have done planned comparisons we should not do post hoc tests (because we have already tested the hypotheses of interest). Likewise, if we choose to conduct post hoc tests then planned contrasts are unnecessary (because we have no hypotheses to test). However, for the sake of space we will conduct some post hoc tests on the superhero data. Click on Post Hoc in the main dialogue box to access the post hoc tests dialogue box (Figure 5). The choice of comparison procedure depends on the exact situation you have and whether you want strict control over the familywise error rate or greater statistical power.
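To see why the familywise error rate is a concern with four groups, the sketch below counts the pairwise comparisons and computes the chance of at least one Type I error if each were tested at α = .05 without correction. It assumes the tests are independent, which is a simplification (pairwise comparisons that share groups are not independent), so treat the figure as a rough guide rather than an exact rate.

```python
from math import comb

alpha = 0.05   # Type I error rate for a single comparison
n_groups = 4   # Superman, Spiderman, Hulk, Ninja Turtle

# Number of distinct pairs of groups that a full set of post hoc tests compares.
n_comparisons = comb(n_groups, 2)

# Probability of at least one Type I error across all comparisons,
# under the simplifying assumption that the tests are independent.
familywise_error = 1 - (1 - alpha) ** n_comparisons

print(f"{n_comparisons} pairwise comparisons")
print(f"familywise error rate without correction: {familywise_error:.3f}")
```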
Field (2013) offers some general guidelines:

→ When you have equal sample sizes and you are confident that your population variances are similar then use R-E-G-W-Q or Tukey because both have good power and tight control over the Type I error rate.
→ If sample sizes are slightly different then use Gabriel’s procedure because it has greater power, but if sample sizes are very different use Hochberg’s GT2.
→ If there is any doubt that the population variances are equal then use the Games-Howell procedure because this seems to generally offer the best performance.

I recommend running the Games-Howell procedure in addition to any other tests you might select because of the uncertainty of knowing whether the population variances are equivalent. For the superhero data there are slightly unequal sample sizes and so we will use Gabriel’s test (see the tips above). When the completed dialogue box looks like Figure 5 click on Continue to return to the main dialogue box.

Figure 5: Dialogue box for specifying post hoc tests

Options

The additional options for one-way ANOVA are fairly straightforward. The dialog box to access these options can be obtained by clicking on Options. First you can ask for some descriptive statistics, which will display a table of the means, standard deviations, standard errors, ranges and confidence intervals for the means of each group. This is a useful option to select because it assists in interpreting the final results. You can also select Homogeneity-of-variance tests. Earlier in the module we saw that there is an assumption that the variances of the groups are equal and selecting this option tests this assumption using Levene’s test (see your handout on bias). SPSS offers us two alternative versions of the F-ratio: the Brown-Forsythe F (1974), and the Welch F (1951). These alternative Fs can be used if the homogeneity of variance assumption is broken.
If you’re interested in the details of these corrections then see Field (2013), but if you’ve got better things to do with your life then take my word for it that they’re worth selecting just in case the assumption is broken. You can also select a Means plot which will produce a line graph of the means. Again, this option can be useful for finding general trends in the data. When you have selected the appropriate options, click on Continue to return to the main dialog box. Click on OK in the main dialog box to run the analysis.

Figure 6: Options for One-Way ANOVA

Bootstrapping

Also in the main dialog box is the alluring Bootstrap button. We have seen in the module that bootstrapping is a good way to overcome bias, and this button glistens and tempts us with the promise of untold riches, like a diamond in a bull’s rectum. However, if you use bootstrapping it’ll be as disappointing as if you reached for that diamond only to discover that it’s a piece of glass. You might, not unreasonably, think that if you select bootstrapping it’d do a nice bootstrap of the F-statistic for you. It won’t. It will bootstrap confidence intervals around the means (if you ask for descriptive statistics), contrasts and differences between means (i.e., the post hoc tests). This, of course, can be useful, but the main test won’t be bootstrapped.

Output from One-Way ANOVA

Descriptive Statistics

Figure 7 shows the ‘means plot’ that we asked SPSS for. A few important things to note are:

✗ It looks horrible.
✗ SPSS has scaled the y-axis to make the means look as different as humanly possible. Think back to week 1 when we learnt that it was very bad to scale your graph to maximise group differences: SPSS has not read my handout ☺
✗ There are no error bars: the graph just isn’t very informative because we aren’t given confidence intervals for the mean.
Paste something like this into one of your lab reports and watch your tutor recoil in horror and your mark plummet! The moral is, never let SPSS do your graphs for you ☺

→ Using what you learnt in week one draw an error bar chart of the data. (Your chart should ideally look like Figure 8.)

Figure 7: Crap graph automatically produced by SPSS

Figure 8: Nicely edited error bar chart of the injury data

Figure 8 shows an error bar chart of the injury data. The means indicate that some superhero costumes do result in more severe injuries than others. Notably, the Ninja Turtle costume seems to result in less severe injuries and the Superman costume results in the most severe injuries. The error bars (the I shapes) show the 95% confidence interval around the mean.

→ Think back to the start of the module: what does a confidence interval represent?

If we were to take 100 samples from the same population, the true mean (the mean of the population) would lie somewhere between the top and bottom of that bar in 95 of those samples. In other words, these are the limits between which the population value for the mean injury severity in each group will (probably) lie. If these bars do not overlap then we expect to get a significant difference between means because it shows that the population means of those two samples are likely to be different (they don’t fall within the same limits). So, for example, we can tell that Ninja Turtle related injuries are likely to be less severe than those of children wearing Superman costumes (the error bars don’t overlap) and Spiderman costumes (only a small amount of overlap).

The table of descriptive statistics verifies what the graph shows: that the injuries for Superman costumes were most severe, and for Ninja Turtle costumes were least severe. This table also provides the confidence intervals upon which the error bars were based.

Output 1

Levene's test

Levene’s test (think back to your lecture and handout on bias) tests the null hypothesis that the variances of the groups are the same. In this case Levene’s test is testing whether the variances of the four groups are significantly different.

→ If Levene’s test is significant (i.e. the value of sig. is less than .05) then we can conclude that the variances are significantly different. This would mean that we had violated one of the assumptions of ANOVA and we would have to take steps to rectify this matter by (1) transforming all of the data (see your handout on bias), (2) bootstrapping (not implemented in SPSS for ANOVA), or (3) using a corrected test (see below). Remember that how we interpret Levene’s test depends on the size of sample we have (see the handout on bias).

Output 2

For these data the variances are relatively similar (hence the high probability value). Typically people would interpret this result as meaning that we can assume homogeneity of variance (because the observed p-value of .459 is greater than .05). However, our sample size is fairly small (some groups had only 6 participants). The small sample (per group) will limit the power of Levene’s test to detect differences between the variances. We could also look at the variance ratio. The smallest variance was for the Ninja Turtle costume (8.16² = 66.59) and the largest was for Superman costumes (17.85² = 318.62). The ratio of these values is 318.62/66.59 = 4.78. In other words, the largest variance is almost five times larger than the smallest variance. This difference is quite substantial. Therefore, we might reasonably assume that variances are not homogeneous. For the main ANOVA, we selected two procedures (Brown-Forsythe and Welch) that should be accurate when homogeneity of variance is not true. So, we should perhaps inspect these F-values in the main analysis.
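The variance ratio can be checked directly from the raw scores. The sketch below is a minimal illustration using Python's standard library and the scores as read from Table 1; because it works from unrounded variances, the ratio may differ in the second decimal place from the 4.78 obtained above from rounded standard deviations.

```python
from statistics import variance

# Injury scores as read from Table 1.
SCORES = {
    "Superman": [69, 32, 85, 66, 58, 52],
    "Spiderman": [51, 31, 58, 20, 47, 37, 49, 40],
    "Hulk": [26, 43, 10, 45, 30, 35, 53, 41],
    "Ninja Turtle": [18, 18, 30, 30, 30, 41, 18, 25],
}

# Sample variance (N - 1 in the denominator) for each costume group.
variances = {hero: variance(values) for hero, values in SCORES.items()}

largest = max(variances, key=variances.get)
smallest = min(variances, key=variances.get)
variance_ratio = variances[largest] / variances[smallest]

for hero, var in variances.items():
    print(f"{hero}: variance = {var:.2f}")
print(f"largest ({largest}) / smallest ({smallest}) = {variance_ratio:.2f}")
```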
We might also choose a method of post hoc test that does not rely on the assumption of equal variances (e.g., the Games-Howell procedure).

The Main ANOVA

Output 3 shows the main ANOVA summary table. The output you will see is the table at the bottom of Output 3. This is a more complicated table than a simple ANOVA table because we asked for a trend analysis of the means (by selecting the polynomial option in Figure 3). The top of Output 3 shows what you would see if you hadn’t done the trend analysis. Note that the Between Groups, Within Groups and Total rows in both tables are the same; it’s just that the bottom table decomposes the Between Groups effect into linear and quadratic trends.
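As a rough check on the Between Groups and Within Groups rows of a summary table like this, the sums of squares and the F-ratio can be computed by hand. The following is a minimal sketch in plain Python using the scores as read from Table 1; the variable names are illustrative, and it covers only the overall F-ratio (not the trend decomposition, the Brown-Forsythe or Welch corrections, or the p-value).

```python
# Injury scores as read from Table 1.
SCORES = {
    "Superman": [69, 32, 85, 66, 58, 52],
    "Spiderman": [51, 31, 58, 20, 47, 37, 49, 40],
    "Hulk": [26, 43, 10, 45, 30, 35, 53, 41],
    "Ninja Turtle": [18, 18, 30, 30, 30, 41, 18, 25],
}


def mean(values):
    return sum(values) / len(values)


all_scores = [x for values in SCORES.values() for x in values]
grand_mean = mean(all_scores)

# Between-groups (model) sum of squares: group means versus the grand mean.
ss_model = sum(len(v) * (mean(v) - grand_mean) ** 2 for v in SCORES.values())

# Within-groups (residual) sum of squares: scores versus their own group mean.
ss_residual = sum((x - mean(v)) ** 2 for v in SCORES.values() for x in v)

# Total sum of squares: every score versus the grand mean.
ss_total = sum((x - grand_mean) ** 2 for x in all_scores)

df_model = len(SCORES) - 1                   # number of groups minus 1
df_residual = len(all_scores) - len(SCORES)  # number of scores minus number of groups

ms_model = ss_model / df_model
ms_residual = ss_residual / df_residual
f_ratio = ms_model / ms_residual

print(f"SS between = {ss_model:.3f}, df = {df_model}")
print(f"SS within  = {ss_residual:.3f}, df = {df_residual}")
print(f"SS total   = {ss_total:.3f}")
print(f"F({df_model}, {df_residual}) = {f_ratio:.2f}")
```

The total sum of squares should equal the sum of the between-groups and within-groups values, which is a quick way to catch a data-entry slip.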
